We've heard your feedback: Nitric's powerful automation and abstraction can sometimes feel like magic, leaving you wanting more transparency about what happens under the hood. A common misconception with infrastructure automation is that you can't have the control you want when inferring your infrastructure requirements from application code. This article will help alleviate that concern – Nitric’s design is specifically intended to allow flexibility and control.

Our documentation introduces the concepts of Nitric at an overview level. This post goes into some of the finer details to bring more transparency to the framework.

Let’s walk through the entire journey, from writing your first line of code to reviewing your deployed application and resources in the cloud console. At each step, I’ll show how Nitric automates deploying and running an application in the cloud without you having to maintain a tightly coupled Terraform project.

Note: We’ll actually be maintaining reusable modules that can be leveraged across all of our applications instead… but more on that later.

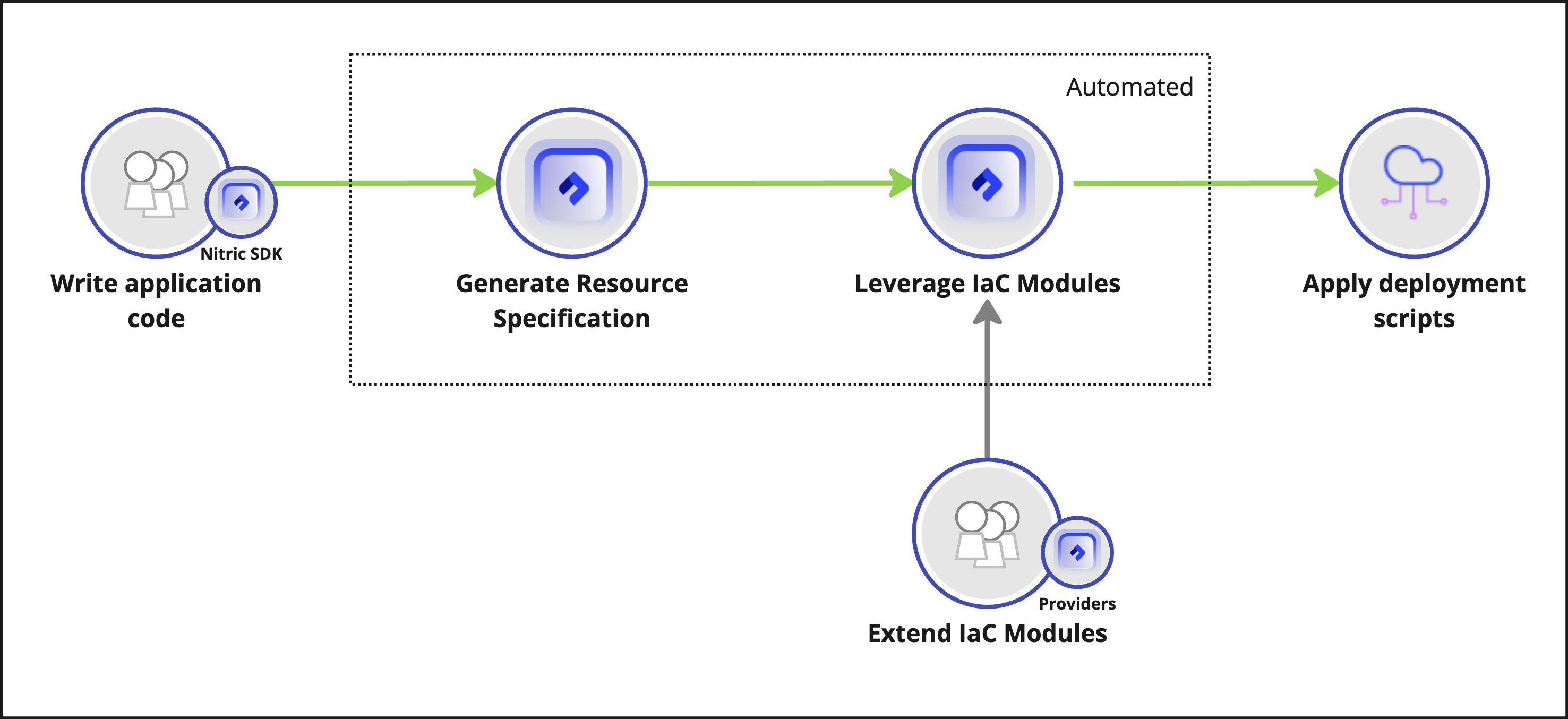

The diagram above shows the activities involved in this journey; the green path traces the typical flow when you first adopt the Nitric framework.

Write Application Code

Writing applications with Nitric involves importing resources from one of the language SDKs. Nitric’s open source framework ships with the foundational resources listed in the table below, which also shows the service each resource deploys as in each cloud.

| Resource | AWS | Azure | Google Cloud |
|---|---|---|---|
| APIs | API Gateway | API Management | API Gateway |
| Key-Value Store | DynamoDB | Table Storage | Firestore |
| Topics | SNS | Event Grid | Pub/Sub |
| Queues | SQS | Storage Queues | Pub/Sub |
| Schedules | EventBridge | Dapr Binding | Cloud Scheduler |
| Secrets | Secrets Manager | Key Vault | Secret Manager |
| Storage | S3 | Blob Storage | Cloud Storage |
| Services/Handlers | Lambda | Container Apps | Cloud Run |

Note: This table is current as of Jun 26, 2024. Please reference the documentation for the most up-to-date support.

One benefit of Nitric is that developers don’t need to know exactly which cloud service will back each resource when they write their first line of code. Because the framework encourages portability, you can choose to deploy to whichever cloud best suits your needs at any time. Plus, the Nitric CLI emulates all of these resources locally, so you can start developing your application immediately without incurring cloud costs and, perhaps more importantly, without setting up individual development environments for each member of your team.

Here is an application written in Python that imports an API and a bucket resource from the Python SDK. It defines a single route handler that returns a download URL for a file in the bucket.

```python
from nitric.resources import api, bucket
from nitric.application import Nitric
from nitric.context import HttpContext

# Declare an API, plus a bucket this service may delete from and write to.
main = api("main_api")
images = bucket("images").allow("deleting", "writing")


# Handle GET /url by returning a download URL for duck.png (expiry: 3600).
@main.get("/url")
async def hello_world(ctx: HttpContext):
    download_url = await images.file("duck.png").download_url(3600)
    ctx.res.body = download_url


Nitric.run()
```

This code snippet alone gives Nitric enough information to create a resource specification that describes the runtime requirements of the application. Without Nitric, developers and operations teams would have to write this down manually. Because Nitric’s language SDKs are bound to a contract, the framework can automatically extract this configuration into a specification, as I’ll describe next.

Nitric Generates Resource Specification

The ability to infer requirements directly from an application demonstrates a new concept in the infrastructure space known as ‘Infrastructure from Code’ (IfC). The key principle is that we can automatically generate ‘live documentation’ of an application’s runtime requirements.

As I mentioned above, the language SDKs are built from contracts, which means the Nitric framework can recognize and collect the resources used in the solution. The framework builds a map of the hierarchical relationships between resources, assigning IDs and types along the way so that each resource can be uniquely identified during deployment. This is especially important at deployment time, because it helps ensure that only least-privilege permissions are configured for the resources to communicate with each other. From the application code snippet above, the framework gathers the following information:

Bucket Resource:

- ID: images

- Config: Default setup.

API Resource:

- ID: main_api

- Config: OpenAPI 3.0.1 document for an API with a GET method at /url, handled by the function hello-world_services-hello.

Policy Resource:

- ID: c26b107582b33de1660950c440ee2ef7

- Config: Policy allowing actions on the images bucket for the hello-world_services-hello service.

Service Resource:

- ID: hello-world_services-hello

- Config: Service with an image hello-world_services-hello, 1 worker, and an environment variable NITRIC_BETA_PROVIDERS set to true.

The verbose internal representation is shown below. Fun fact: the summary above was created by feeding this specification into GPT and asking for a summary without superfluous details.

```json
{
  "spec": {
    "resources": [
      {
        "id": {
          "name": "images"
        },
        "Config": {
          "Bucket": {}
        }
      },
      {
        "id": {
          "name": "main_api"
        },
        "Config": {
          "Api": {
            "Document": {
              "Openapi": "{\"components\":{},\"info\":{\"title\":\"main_api\",\"version\":\"v1\"},\"openapi\":\"3.0.1\",\"paths\":{\"/url\":{\"get\":{\"operationId\":\"urlget\",\"responses\":{\"default\":{\"description\":\"\"}},\"x-nitric-target\":{\"name\":\"hello-world_services-hello\",\"type\":\"function\"}}}}}"
            }
          }
        }
      },
      {
        "id": {
          "name": "c26b107582b33de1660950c440ee2ef7"
        },
        "Config": {
          "Policy": {
            "principals": [
              {
                "id": {
                  "name": "hello-world_services-hello"
                },
                "Config": null
              }
            ],
            "actions": [
              "deleting",
              "writing"
            ],
            "resources": [
              {
                "id": {
                  "name": "images"
                },
                "Config": null
              }
            ]
          }
        }
      },
      {
        "id": {
          "name": "hello-world_services-hello"
        },
        "Config": {
          "Service": {
            "Source": {
              "Image": {
                "uri": "hello-world_services-hello"
              }
            },
            "workers": 1,
            "type": "default",
            "env": {}
          }
        }
      }
    ]
  }
}
```

Nitric also uses this specification to generate live visualizations of the resource hierarchy, which you can access through the developer dashboard.
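As described earlier, the SDKs let the framework collect each declared resource, give it an ID and type, and record which service needs which actions on which resource. The sketch below models that collection step with a hypothetical `Registry` class. It is a simplified illustration, not Nitric’s internal code, and deriving the policy ID from an MD5 hash of the policy body is an assumption made only so the ID is deterministic.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Registry:
    """Hypothetical collector: each SDK declaration registers a resource here."""
    resources: dict = field(default_factory=dict)

    def add(self, name: str, kind: str, config: dict) -> None:
        # Key by (type, name) so every resource is uniquely identifiable later.
        self.resources[(kind, name)] = config

    def allow(self, principal: str, actions: list, resource: str) -> str:
        # One policy = one principal, only the listed actions, one resource:
        # least privilege by construction.
        body = {"principal": principal, "actions": sorted(actions), "resource": resource}
        # Illustrative deterministic ID (an assumption, not Nitric's scheme).
        policy_id = hashlib.md5(json.dumps(body, sort_keys=True).encode()).hexdigest()
        self.add(policy_id, "Policy", body)
        return policy_id

    def to_spec(self) -> dict:
        return {
            "resources": [
                {"id": {"name": name, "type": kind}, "config": config}
                for (kind, name), config in self.resources.items()
            ]
        }


registry = Registry()
registry.add("images", "Bucket", {})
registry.add("main_api", "Api", {"routes": {"GET /url": "hello-world_services-hello"}})
registry.add("hello-world_services-hello", "Service", {"workers": 1})
policy_id = registry.allow("hello-world_services-hello", ["deleting", "writing"], "images")

for r in registry.to_spec()["resources"]:
    print(r["id"]["type"], r["id"]["name"])
```

Sorting the actions before hashing means the same request always yields the same policy ID, regardless of the order in which permissions were declared.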

Nitric Leverages IaC Modules

Once Nitric generates the resource specification, the framework can then interpret it to compose deployment scripts.

As a refresher, the specification is a collection of resources, permission requests, and the hierarchy of resource relationships. To create deployment scripts, Nitric maps each of these components to one or more script blocks capable of configuring the cloud appropriately.

Nitric maps each resource request in the specification to a runtime plugin and a deployment plugin.

- Runtime plugins are lightweight adapters that translate requests from a language SDK into calls to your cloud’s specific APIs.

- Deployment plugins are responsible for provisioning and configuring the cloud resources defined by your application, typically using IaC modules written in Terraform or Pulumi.
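On the runtime side, this is essentially the adapter pattern. As a rough illustration (not Nitric’s actual plugin interface, which is defined by its own contracts), the sketch below defines a hypothetical `BucketPlugin` protocol and an in-memory implementation of the kind a local emulator might use; a cloud implementation would make the same calls against S3, Blob Storage, or Cloud Storage instead.

```python
from typing import Protocol


class BucketPlugin(Protocol):
    """Hypothetical contract that every cloud's bucket adapter must satisfy."""

    def write(self, bucket: str, key: str, data: bytes) -> None: ...
    def read(self, bucket: str, key: str) -> bytes: ...
    def delete(self, bucket: str, key: str) -> None: ...


class InMemoryBucketPlugin:
    """Local stand-in that keeps objects in a dict instead of a cloud service."""

    def __init__(self) -> None:
        self._objects: dict[tuple[str, str], bytes] = {}

    def write(self, bucket: str, key: str, data: bytes) -> None:
        self._objects[(bucket, key)] = data

    def read(self, bucket: str, key: str) -> bytes:
        return self._objects[(bucket, key)]

    def delete(self, bucket: str, key: str) -> None:
        self._objects.pop((bucket, key), None)


def save_image(plugin: BucketPlugin, data: bytes) -> None:
    # Application-facing code depends only on the contract, never on a cloud SDK.
    plugin.write("images", "duck.png", data)


plugin = InMemoryBucketPlugin()
save_image(plugin, b"\x89PNG")
print(plugin.read("images", "duck.png"))
```

Swapping clouds then means passing a different object that satisfies the same protocol, while `save_image` stays unchanged.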

In Nitric, a combination of a runtime plugin and a deployment plugin is called a provider. Nitric has standard providers for several clouds that use Pulumi as the IaC tool to deploy your application directly to the cloud. These are a good starting point for most teams, but you can also build custom providers to fit your project’s needs.

Nitric also has Terraform providers available to the community in preview. These act more like a transpiler for the cloud infrastructure requirements, allowing Nitric to generate a Terraform stack using CDKTF.

Regardless of which IaC tool you choose, your provider fulfills each resource request according to the table shown previously (unless you make customizations, as described in the extension section further down). For example, when the application requests a bucket, the target cloud is AWS, and the IaC tool is Terraform, the provider assigns a Terraform module capable of provisioning an S3 bucket.
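The resolution step is, in effect, a lookup keyed by resource type, target cloud, and IaC tool. The minimal sketch below encodes a few rows of the earlier table that way. The registry and its module paths are hypothetical placeholders (only `nitric_modules/bucket` echoes a path from the generated Terraform shown later), not the real layout of Nitric’s providers.

```python
# Hypothetical registry: (resource type, cloud, IaC tool) -> module that fulfills it.
MODULES = {
    ("bucket", "aws", "terraform"): "nitric_modules/bucket",  # S3
    ("api", "aws", "terraform"): "nitric_modules/api",        # API Gateway
    ("bucket", "gcp", "pulumi"): "gcp/bucket",                # Cloud Storage
    ("bucket", "azure", "pulumi"): "azure/blob_storage",      # Blob Storage
}


def resolve(resource_type: str, cloud: str, iac: str) -> str:
    """Return the module that provisions this resource for the given target."""
    try:
        return MODULES[(resource_type, cloud, iac)]
    except KeyError:
        raise LookupError(
            f"no {iac} module for {resource_type!r} on {cloud}; "
            "a custom provider would need to supply one"
        ) from None


print(resolve("bucket", "aws", "terraform"))
```

In this model, customizing a provider amounts to pointing an entry at a different module, while the application and its specification stay the same.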

How it works

Let’s take a look at the Terraform module a deployment plugin uses for an API. Examining this Terraform shows exactly how Nitric provisions infrastructure, so nothing is hidden from the developers and operations teams who need to control and customize it.

At deployment time, Nitric scans the specification and substitutes its values into this Terraform module. This example uses a Terraform provider for AWS; however, the same application and resource specification could also be deployed to GCP using a Terraform or Pulumi provider.

```hcl
# The HTTP API itself; its routes come from the OpenAPI document passed in as var.spec.
resource "aws_apigatewayv2_api" "api_gateway" {
  name          = var.name
  protocol_type = "HTTP"
  body          = var.spec

  tags = {
    "x-nitric-${var.stack_id}-name" = var.name
    "x-nitric-${var.stack_id}-type" = "api"
  }
}

# Default stage that redeploys automatically whenever the API changes.
resource "aws_apigatewayv2_stage" "stage" {
  api_id      = aws_apigatewayv2_api.api_gateway.id
  name        = "$default"
  auto_deploy = true
}

# Allow API Gateway to invoke each target Lambda function.
resource "aws_lambda_permission" "apigw_lambda" {
  for_each      = var.target_lambda_functions
  action        = "lambda:InvokeFunction"
  function_name = each.value
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_apigatewayv2_api.api_gateway.execution_arn}/*/*/*"
}

# Look up an existing ACM certificate for each custom domain.
data "aws_acm_certificate" "cert" {
  for_each = var.domains
  domain   = each.value
}

# Register each custom domain with API Gateway using its certificate.
resource "aws_apigatewayv2_domain_name" "domain" {
  for_each    = var.domains
  domain_name = each.value

  domain_name_configuration {
    certificate_arn = data.aws_acm_certificate.cert[each.key].arn
    endpoint_type   = "REGIONAL"
    security_policy = "TLS_1_2"
  }
}

output "api_endpoint" {
  value = aws_apigatewayv2_api.api_gateway.api_endpoint
}
```
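To make the substitution step concrete, here is a sketch of how the module’s `name`, `spec`, and `target_lambda_functions` inputs could be filled from the API resource in the specification. It is an illustration under assumptions: the `lambda_names` mapping from service names to deployed Lambda function names is hypothetical, the `domains` and `stack_id` inputs are omitted, and a real provider would likely also need to rewrite `x-nitric-target` into API Gateway integration settings before passing the document as `body`, which this sketch does not do.

```python
import json


def api_module_inputs(api_resource: dict, lambda_names: dict[str, str]) -> dict:
    """Build input variables for the API module from one specification entry."""
    name = api_resource["id"]["name"]
    openapi = api_resource["Config"]["Api"]["Document"]["Openapi"]
    # The OpenAPI document is embedded as a JSON string, so decode it again.
    doc = json.loads(openapi)

    # Collect every service referenced by an x-nitric-target in the document.
    targets = {
        op["x-nitric-target"]["name"]
        for methods in doc["paths"].values()
        for op in methods.values()
        if "x-nitric-target" in op
    }
    return {
        "name": name,
        "spec": openapi,  # passed through as the API Gateway body
        # Each target maps to its (hypothetical) deployed Lambda function name;
        # the module iterates over this map to grant lambda:InvokeFunction.
        "target_lambda_functions": {t: lambda_names[t] for t in sorted(targets)},
    }


api_resource = {
    "id": {"name": "main_api"},
    "Config": {"Api": {"Document": {"Openapi": json.dumps({
        "openapi": "3.0.1",
        "info": {"title": "main_api", "version": "v1"},
        "paths": {"/url": {"get": {"x-nitric-target": {
            "name": "hello-world_services-hello", "type": "function"}}}},
    })}}},
}

inputs = api_module_inputs(api_resource, {"hello-world_services-hello": "hello-fn"})
print(json.dumps({k: v for k, v in inputs.items() if k != "spec"}, indent=2))
```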

Optional: Extend IaC Modules

Nitric’s pluggable architecture lets you leave your application unchanged and deploy to any cloud that has a provider, simply by changing the provider you use to fulfill the requests.

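A significant advantage of using Terraform with Nitric is how easily you can extend and customize the modules that have already been defined. For example, to configure request throttling for your API Gateway, you’d simply override the "aws_apigatewayv2_stage" resource and add default route settings.

```hcl
variable "throttling_burst_limit" {
  description = "Throttling burst limit for the API Gateway stage's default route"
  type        = number
  default     = 100
}

variable "throttling_rate_limit" {
  description = "Throttling rate limit (requests per second) for the default route"
  type        = number
  default     = 50
}

resource "aws_apigatewayv2_stage" "stage" {
  api_id      = aws_apigatewayv2_api.api_gateway.id
  name        = "$default"
  auto_deploy = true

  # Applies to every route that has no route-specific settings.
  default_route_settings {
    throttling_burst_limit = var.throttling_burst_limit
    throttling_rate_limit  = var.throttling_rate_limit
  }
}
```

Once the provider is rebuilt, every project deployed with it from then on will have an API Gateway stage with these throttling limits configured.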

Apply Deployment Scripts

Now that Nitric has a resource specification for your application and providers to fulfill the requirements of each resource you intend to use, the last step is to bring them together with a deployment engine. The deployment engine handles containerization and creates the end-state deployment scripts that actually provision the necessary infrastructure.

Nitric Handles Containerization

The Nitric framework parses the resource specification, looking for handlers such as API routes, scheduled jobs, and event subscriptions. These are the units of executable code in your application.

Using the Docker SDK, Nitric programmatically builds images from these handlers and packages them with the lightweight runtime plugins (described above), which enable runtime capabilities such as bucket.write() and topic.subscribe(). This step in the automation process is also customizable.
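Here is a rough sketch of the idea, assuming a simplified approach rather than Nitric’s actual build code: for each service, generate a wrapper Dockerfile that layers a runtime plugin binary on top of the handler’s base image and makes that runtime the entrypoint, so it can proxy SDK calls such as bucket writes to the cloud. The base image naming, the `/bin/runtime` path, and the runtime download URL are hypothetical. Actually building the image (for example with the Docker SDK for Python’s `client.images.build`) is left out so the sketch runs without a Docker daemon.

```python
import json


def wrapper_dockerfile(base_image: str, runtime_uri: str, command: list[str]) -> str:
    """Hypothetical wrapper image: the runtime plugin starts first, then the handler."""
    return "\n".join([
        f"FROM {base_image}",
        # Add the cloud-specific runtime plugin binary; files added from a URL
        # get 600 permissions, hence the chmod.
        f"ADD {runtime_uri} /bin/runtime",
        "RUN chmod +x /bin/runtime",
        # Exec-form ENTRYPOINT plus CMD: the handler command becomes the runtime's arguments.
        f"ENTRYPOINT {json.dumps(['/bin/runtime'])}",
        f"CMD {json.dumps(command)}",
    ])


def build_plan(services: dict[str, list[str]], runtime_uri: str) -> dict[str, str]:
    # One image per handler, tagged with the service name used in the specification.
    return {
        name: wrapper_dockerfile(f"{name}-base", runtime_uri, command)
        for name, command in services.items()
    }


plan = build_plan(
    {"hello-world_services-hello": ["python", "services/hello.py"]},
    runtime_uri="https://example.com/runtime-aws",  # hypothetical download URL
)
print(plan["hello-world_services-hello"])
```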

Nitric Generates Deployment Scripts

Continuing our example with the Terraform provider, the Nitric framework now parses the resource specification (collecting the resources, policies, and configuration) and composes a Terraform project from the Terraform modules for your target cloud. This orchestration is made possible by CDKTF, which synthesizes the Terraform configuration file.

The output Terraform is rather long (you can easily export your own with the Nitric CLI), but here is a snippet of the configuration that provisions our storage bucket as an AWS S3 bucket, since we used an AWS provider.

```json
"bucket_images_bucket": {
  "//": {
    "metadata": {
      "path": "hello-world-tf/bucket_images_bucket",
      "uniqueId": "bucket_images_bucket"
    }
  },
  "bucket_name": "images_bucket",
  "notification_targets": {},
  "source": "./assets/__cdktf_module_asset_26CE565C/nitric_modules/bucket",
  "stack_id": "${module.stack.stack_id}"
},
"policy_9e656b00724983588e9aec04820d84f7": {
  "//": {
    "metadata": {
      "path": "hello-world-tf/policy_9e656b00724983588e9aec04820d84f7",
      "uniqueId": "policy_9e656b00724983588e9aec04820d84f7"
    }
  },
  "actions": [
    "s3:PutObject",
    "s3:DeleteObject"
  ],
  "principals": {
    "hello-world_services-hello:Service": "${module.service_hello-world_services-hello.role_name}"
  },
  "resources": [
    "${module.bucket_images_bucket.bucket_arn}",
    "${module.bucket_images_bucket.bucket_arn}/*"
  ],
  "source": "./assets/__cdktf_module_asset_26CE565C/nitric_modules/policy"
}
```
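The translation visible in this snippet (the abstract `writing` and `deleting` actions becoming `s3:PutObject` and `s3:DeleteObject` on both the bucket ARN and every object under it) can be sketched as a small function that emits Terraform JSON module blocks. Only those two action mappings come from the output above; the `S3_ACTIONS` table beyond them, the `MODULE_ROOT` path, the `policy_example` key, and the simplified block shape (no `//` metadata, no `notification_targets`) are assumptions for illustration.

```python
import json

# Taken from the generated output above: abstract action -> S3 IAM actions.
S3_ACTIONS = {
    "writing": ["s3:PutObject"],
    "deleting": ["s3:DeleteObject"],
}
MODULE_ROOT = "./nitric_modules"  # hypothetical; real output uses a CDKTF asset path


def bucket_module(bucket_name: str, stack_ref: str) -> dict:
    return {"bucket_name": bucket_name, "source": f"{MODULE_ROOT}/bucket", "stack_id": stack_ref}


def policy_module(actions: list[str], service: str, bucket_key: str) -> dict:
    iam_actions = [a for action in actions for a in S3_ACTIONS[action]]
    bucket_arn = f"${{module.{bucket_key}.bucket_arn}}"  # Terraform interpolation string
    return {
        "actions": iam_actions,
        "principals": {f"{service}:Service": f"${{module.service_{service}.role_name}}"},
        # Grant on the bucket itself and on every object key inside it.
        "resources": [bucket_arn, f"{bucket_arn}/*"],
        "source": f"{MODULE_ROOT}/policy",
    }


modules = {
    "bucket_images_bucket": bucket_module("images_bucket", "${module.stack.stack_id}"),
    "policy_example": policy_module(
        ["deleting", "writing"], "hello-world_services-hello", "bucket_images_bucket"
    ),
}
print(json.dumps({"module": modules}, indent=2))
```

An unmapped action raises a `KeyError` here, which is one simple way to refuse to grant permissions a provider does not know how to express.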

As mentioned previously, the open source edition of Nitric currently supports Terraform and Pulumi as IaC providers. With Pulumi, deployment is highly streamlined: no output artifacts are produced, so provisioning happens immediately when you run the ‘nitric up’ command.

With the Terraform providers, however, the ‘nitric up’ command triggers the Nitric framework to generate a Terraform project that plugs into your deployment workflow just as manually written scripts would. This means the tools your team already uses for security scanning, static analysis, or custom state management all remain available.

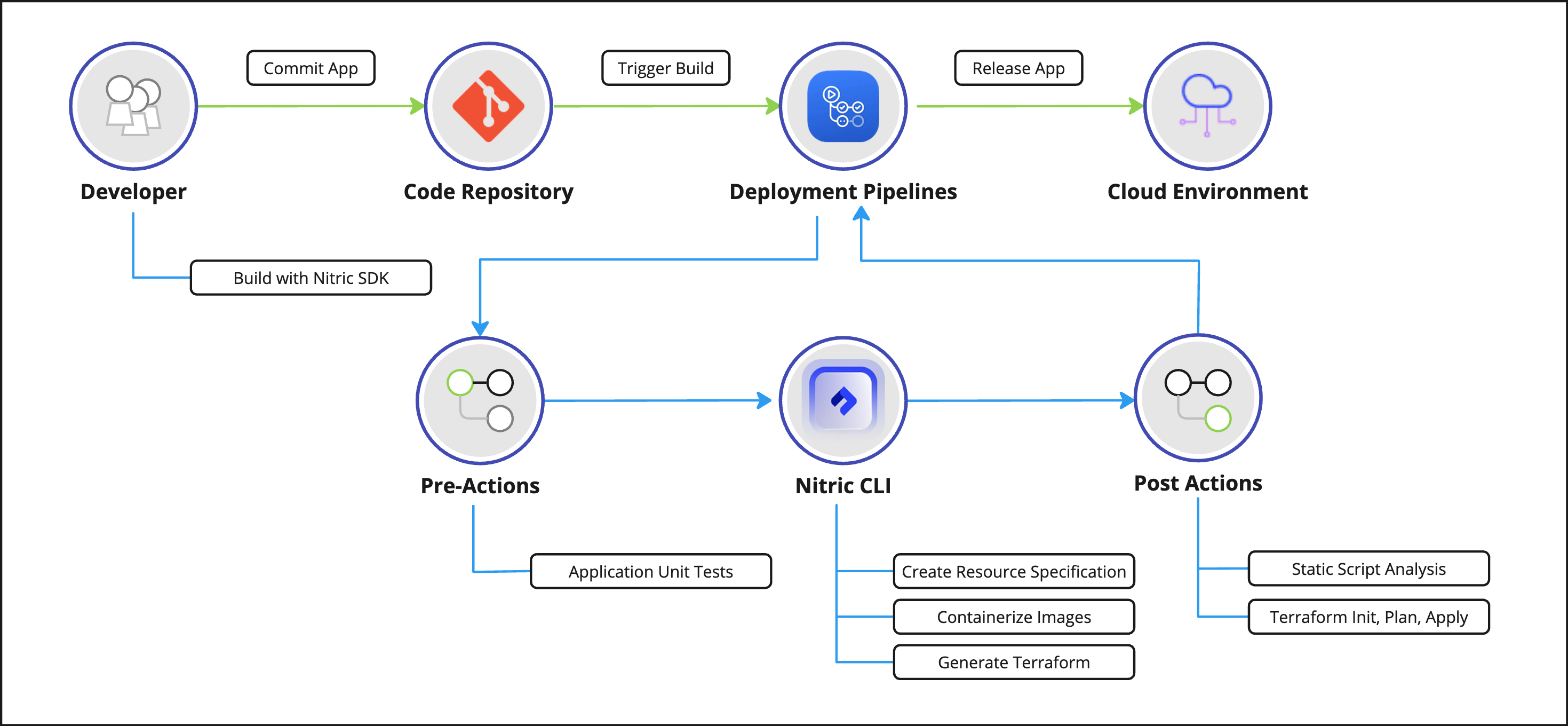

This workflow details the steps taken from code commit to cloud deployment.
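As a sketch of how the generated project can slot into an existing pipeline, the function below builds (but does not run) the sequence of commands a CI job might execute after ‘nitric up’ generates the Terraform project. The output directory is a parameter because its location depends on your setup, and the `extra_checks` hook is a hypothetical placeholder for whatever scanners or policy checks your team already runs.

```python
import subprocess
from typing import List, Optional


def pipeline(tf_dir: str, extra_checks: Optional[List[List[str]]] = None) -> List[List[str]]:
    """Commands a CI job might run, in order, for a Nitric-generated Terraform project."""
    tf = ["terraform", f"-chdir={tf_dir}"]
    steps = [
        ["nitric", "up"],              # generate the Terraform project from the app
        tf + ["init"],
        tf + ["validate"],
    ]
    steps += extra_checks or []        # e.g. security scans or static analysis
    steps += [
        tf + ["plan", "-out=tfplan"],  # saved plan that can be reviewed before apply
        tf + ["apply", "tfplan"],
    ]
    return steps


def run(steps: List[List[str]]) -> None:
    for cmd in steps:
        subprocess.run(cmd, check=True)  # stop at the first failing step


for cmd in pipeline("path/to/generated/stack"):  # hypothetical output directory
    print(" ".join(cmd))
```

Keeping plan and apply as separate steps with a saved plan file is what lets review and policy tooling sit between generation and provisioning.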

Result: Infrastructure Transparency

While Nitric's automation and abstraction might initially seem like magic, the framework’s thoughtful design makes it possible to observe and adjust the details all along the way. By inferring requirements directly from application code and using a modular, pluggable architecture, Nitric provides transparency and control over your infrastructure. Plus, you gain a streamlined, efficient process from writing your application to deploying it in the cloud, ensuring consistency and ease of use for your team. It's about applying good design principles and using powerful tools like Terraform to maintain flexibility and extensibility. This approach demystifies infrastructure management, making it accessible without sacrificing the control and customization developers need.

We’d love to know if these details helped you understand how Nitric’s abstraction works. Reach out on Discord to share your feedback and ask any questions.